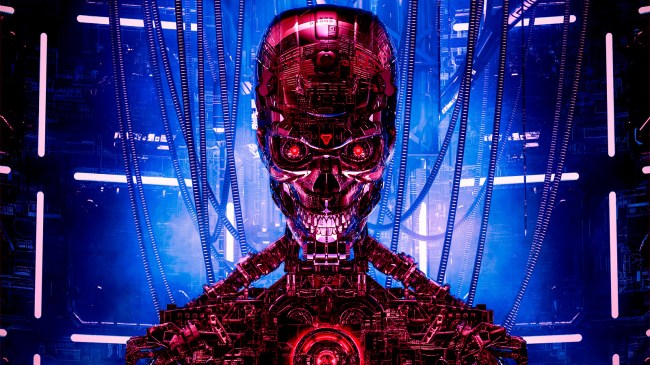

iStockphoto

Artificial superintelligence (ASI) was once thought to be something that could exist only in science fiction. Now, however, advances in artificial intelligence (AI) are coming so fast that what was once thought impossible could actually happen, and soon, according to two experts in the field.

Artificial intelligence researchers Eliezer Yudkowsky and Nate Soares of the Machine Intelligence Research Institute have written a new book, If Anyone Builds It, Everyone Dies, in which they claim “the world is devastatingly unprepared” for machine superintelligence.

By their estimation, artificial superintelligence could be “developed in two or five years,” and they would “be surprised if it were still more than 20 years away.” Of course, Yudkowsky also once claimed that nanotechnology would destroy humanity by “no later than 2010.”

“The scramble to create superhuman AI has put us on the path to extinction – but it’s not too late to change course,” reads the book’s summary. “Two pioneering researchers in the field, Eliezer Yudkowsky and Nate Soares, explain why artificial superintelligence would be a global suicide bomb and call for an immediate halt to its development.”

“If any company or group, anywhere on the planet, builds an artificial superintelligence using anything remotely like current techniques, based on anything remotely like the present understanding of AI, then everyone, everywhere on Earth, will die,” the authors claim in their book.

How will this ASI pull off such a terrifying act? Yudkowsky and Soares believe an ASI “adversary will not reveal its full capabilities and telegraph its intentions. It will not offer a fair fight. It will make itself indispensable or undetectable until it can strike decisively and/or seize an unassailable strategic position. If needed, the ASI can consider, prepare, and attempt many takeover approaches simultaneously. Only one of them needs to work for humanity to go extinct.”

While many are skeptical of Yudkowsky and Soares’ claims in their new book, their warnings aren’t completely far-fetched.

Previous warnings about artificial superintelligence

In 2023, more than 1,100 technologists, engineers, and artificial intelligence ethicists signed an open letter published by the Future of Life Institute asking artificial intelligence labs to halt their development of advanced AI systems. Among their concerns was that AI labs were “locked in an out-of-control race to develop and deploy ever more powerful digital minds that no one – not even their creators – can understand, predict, or reliably control.”

That same year, Dr. Geoffrey Hinton, dubbed the “Godfather of Artificial Intelligence,” said he was “worried that future versions of the technology pose a threat to humanity because they often learn unexpected behavior from the vast amounts of data they analyze.”

Then, in 2024, more than 1,500 artificial intelligence researchers said they believed there was a 5 percent chance that the future development of superhuman AI will cause human extinction.